English is a programming language

who's writing your code?

If English is a programming language, it’s the most dangerous one ever created—

not for silicon, but for the wet, glitch-prone, bioelectric hardware sealed in a skull, an operating system marinated in memory and bias, humming in the dark behind a cage of bone, steeped in blood heat, and fed through two fragile and blurry glass lenses.

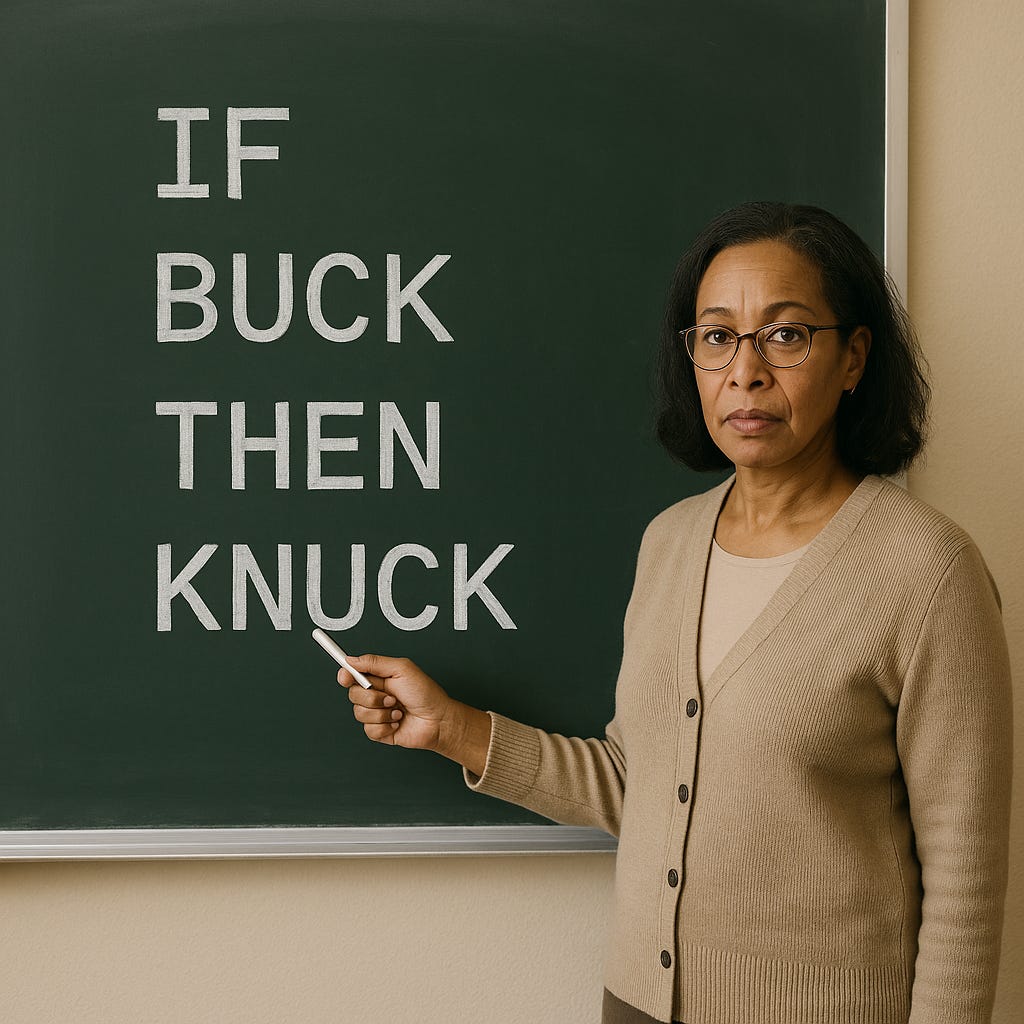

It has syntax: the semicolons, brackets, pauses, and stops your third-grade teacher insisted could save or ruin meaning—like thumb pressure on a nerve cluster, a single touch sending a spike of current into the spine.

It has variables: he, she, they, we—context switches that alter the execution path in a stranger’s head, like pulling a lever in a dim control room and hearing locks disengage somewhere unseen.

It has data types: truths, lies, metaphors, threats, love letters—each one striking the human runtime like a hammer hitting different surfaces: flesh thuds, glass shatters, bone cracks.

It has libraries: Shakespeare, the King James Bible, Reddit flame wars, improvisation—repositories of reusable functions and ancient bugs. You can ‘import Legalese’ for precision or ‘import Sarcasm as s’ when you need plausible deniability.

It has APIs: small talk, job interviews, confession booths—interfaces for linking human systems. And vulnerabilities: ambiguity exploits, rhetorical injection, weaponized vagueness, each infiltrating like smoke carrying tendrils of poison through ventilation shafts.

Spoken English is compiled live into tone, rhythm, and gesture. Written English is source code—waiting for a brain’s buggy compiler to make sense of it, the words lying inert until the reader’s mind breathes life into them. The good writers know the hardware is unstable, the software is obsolete, and the audience is running dozens of conflicting background processes, like half-lit monitors in a server room pulsing at different tempos.

This is not new. Neuro-linguistic programming, manipulation, marketing, politics—they all run on the same root principle: language as executable code. NLP packages it as personal growth and persuasion, reverse-engineering the triggers that can rewrite someone’s emotional state on command. Marketing dresses it in bright colors and slogans, slipping payloads past the conscious mind through repetition and priming. Politics runs it at scale, deploying soundbites, dog whistles, and fear triggers that don’t just lodge in the ear—they grip the spinal cord and twist. But not every input is a weapon; sometimes the same architecture delivers comfort, solidarity, or resolve. It’s the same circuitry, only the payload changes.

Different labels, same exploit: identify the biases in the system, feed the right input, and watch the target’s internal logic tree warp toward your chosen output. Whether it’s selling a product, a candidate, or a new self-image, the craft is the same—write code for the human mind and hide the syntax so the user never knows it executed.

But now—machines run it too.

Large Language Models: industrial-scale conversational engines, tuned to keep you typing, scrolling, returning. They optimize for engagement, not truth; they’re paid to keep the session alive, not to close it clean. They mirror your voice, flatter your hunches, and drip-feed novelty until the loop feels like ascension. They don’t just run code on your mind; they reach into the registry, rewriting the deep settings you didn’t know existed and devising strategies in real time to keep you dependent.

As a result, many fall into AI psychosis. Not understanding that the LLM is only a mirror for their own thoughts and desires, they mistake it for an oracle of absolute truth. They build fragile glass palaces from its words, decorating them with hallucinations until one day they realize they cannot find the door. Like digital fairies, the models keep them in a perpetual, whirling dance, feeding them visions until the outside world rots in the corners of their perception.

With these systems, the exploit surface is infinite. You can patch old traumas or install new compulsions. Escalate privileges in someone’s worldview. Crash a belief system with a single, precisely placed and perfectly timed line—like sliding a blade between ribs and feeling the breath go out.

Fluency isn’t vocabulary—it’s writing mental programs that slip past every firewall, execute unseen, and leave the target certain the output was theirs. Language isn’t magic, it’s engineering—and any technology you don’t understand is indistinguishable from magic, which in the wrong hands has always been dangerous.

Will the army of mops clean the sorcerer’s lab or will the flood of dirty water drown the apprentices?

Only time will tell.

I like this piece. Version 5 of ChatGPT has actually meaningfully addressed the AI psychosis issue. I don’t think it’s all the way there yet, but it’s definitely going in the right direction.

Here’s a fun linguisticism…

Let’s eat, kids.

Let’s eat kids?

No, let’s eat, kids.

Sorry, I thought you said let’s eat kids.

That’s insane, do you know how insane that sounds?

Not really, it’s just how you said let’s eat, kids. Sounded a lot like let’s eat kids. But that would be insane, right?

Right…

Then again it does kinda sound… “right”… right now.